One of the benefits of using immutable infrastructure is that it allows you to canary-test your infrastructure. That is, you provision a new version of your infrastructure components, deploy your services, route a small percentage of the traffic to the new infrastructure, monitor how the apps behave on it, and eventually switch all traffic to the new infrastructure.

To implement canary traffic orchestration, products such as Azure Traffic Manager or Azure Front Door, both with the weighted traffic-routing method, are normally used. But what if you use Azure API Management (APIM) in front of your services? How can you distribute traffic between services running on the current and vNext versions of your infrastructure?

Use-case description

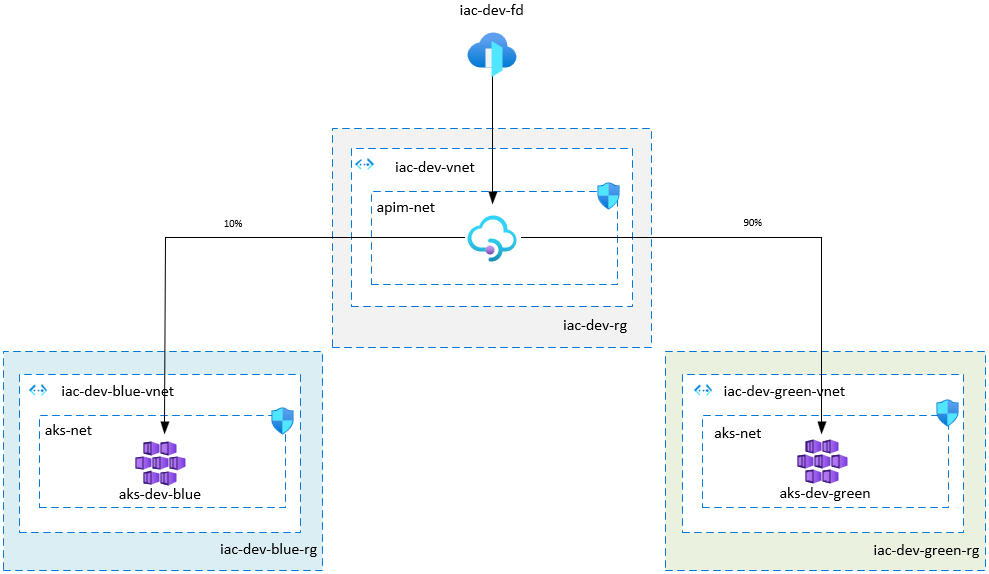

Let’s consider the following hypothetical infrastructure setup.

- We use Azure Front Door in front of APIM

- The APIM instance is deployed to a private VNet with the external access type. That means the APIM gateway is accessible from the public internet and the gateway can access resources within the virtual network

- We don’t want direct access to APIM from the internet, therefore the APIM Network Security Group (NSG) is configured to only accept traffic from Azure Front Door

- AKS is deployed to a private VNet peered with the APIM VNet

- The `aks-net` NSG is configured to only accept traffic from the `apim-net` subnet

- We use the AKS NGINX private ingress controller

- We use the `blue`/`green` convention to mark our infrastructure versions

- Normally, only one version of the AKS cluster is active, but when we introduce infrastructure changes (for example, upgrading the AKS cluster to a newer version, changing the AKS VM size, or making other AKS-related changes), we have two active AKS clusters

APIM policy configuration

APIM policies are a collection of statements that are executed sequentially on the request or response of an API. Popular statements include format conversion from XML to JSON and call rate limiting to restrict the number of incoming calls. See the full policy reference index for the complete list.

To implement canary flow orchestration between two AKS clusters, we can use the control flow (`choose`) and `set-backend-service` policies.

Here is an example of an API level policy for an API named `api-b`, assuming that the `api-b` backend service is accessible via the following AKS endpoints:

- `http://10.2.15.10/api-b/` at `aks-dev-blue`

- `http://10.3.15.10/api-b/` at `aks-dev-green`

```xml
<policies>
    <inbound>
        <base />
        <choose>
            <when condition="@(new Random().Next(100) < {{canaryPercent}})">
                <set-backend-service base-url="http://{{aksHostCanary}}/api-b/" />
            </when>
            <otherwise>
                <set-backend-service base-url="http://{{aksHost}}/api-b/" />
            </otherwise>
        </choose>
    </inbound>
    ...
</policies>
```

We use APIM named values to store our configurable variables:

- `canaryPercent` contains the percentage (a value from 0 to 100) of the requests we want to send to the canary cluster

- `aksHost` contains the AKS ingress controller private IP address (10.2.15.10) of the current AKS cluster (in our use-case, `aks-dev-blue`)

- `aksHostCanary` contains the AKS ingress controller private IP address (10.3.15.10) of the next version of the AKS cluster (in our use-case, `aks-dev-green`)
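To make the routing rule concrete, here is a minimal Python simulation of the `choose` block above. It is a sketch, not APIM code: `choose_base_url` is a hypothetical helper, and `rng.randrange(100)` stands in for .NET's `new Random().Next(100)`. Both return an integer from 0 to 99, so the `when` branch is taken for `canaryPercent` out of every 100 possible values: 0 never selects the canary cluster and 100 always does.

```python
import random
from collections import Counter


def choose_base_url(canary_percent: int, aks_host: str, aks_host_canary: str,
                    rng: random.Random) -> str:
    """Mimic the <choose> block: route about canary_percent% of requests to the canary host."""
    if not 0 <= canary_percent <= 100:
        raise ValueError("canaryPercent must be between 0 and 100")
    # rng.randrange(100) stands in for new Random().Next(100): an int in [0, 100).
    if rng.randrange(100) < canary_percent:
        return f"http://{aks_host_canary}/api-b/"  # <when> branch
    return f"http://{aks_host}/api-b/"  # <otherwise> branch


if __name__ == "__main__":
    rng = random.Random(42)  # fixed seed so the run is reproducible
    total = 10_000
    hits = Counter(
        choose_base_url(10, "10.2.15.10", "10.3.15.10", rng) for _ in range(total)
    )
    for url, count in sorted(hits.items()):
        print(f"{url}: {count / total:.1%}")
```

With `canaryPercent` = 10, roughly 10% of the simulated requests resolve to `http://10.3.15.10/api-b/`. The split is decided independently per request, so over small numbers of requests the observed share can deviate noticeably from the configured percentage.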

This approach works fine for one or two APIs, but with hundreds of APIs under APIM it adds a lot of XML “noise” to every API policy and duplicates the canary orchestration logic all over the place. To solve this, we can move the canary logic to the Global level policy, store its result in the `aksUrl` variable using the `set-variable` policy, and then use this variable in the `set-backend-service` policy at the API level.

Global level policy

```xml
<policies>
    <inbound>
        <choose>
            <when condition="@(new Random().Next(100) < {{canaryPercent}})">
                <set-variable name="aksUrl" value="http://{{aksHostCanary}}" />
            </when>
            <otherwise>
                <set-variable name="aksUrl" value="http://{{aksHost}}" />
            </otherwise>
        </choose>
    </inbound>
    ...
</policies>
```

api-b API level policy

```xml
<policies>
    <inbound>
        <base />
        <set-backend-service base-url="@(context.Variables.GetValueOrDefault<string>("aksUrl") + "/api-b/")" />
    </inbound>
    ...
</policies>
```

This way, we keep the canary “business” logic in one place (the Global level policy), and if we need to change it, the change is made in one place only.
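The two-level design can be sketched the same way. In this hypothetical Python simulation, a plain dict stands in for `context.Variables`, `global_inbound` mimics the Global level policy, and `api_inbound` mimics an API level policy whose `<base />` runs the global logic before `set-backend-service`. The `NAMED_VALUES` dict and the extra API names `api-a` and `api-c` are assumptions for illustration. In APIM, `GetValueOrDefault<string>` would typically return null for a missing variable; the sketch raises an error instead so that a missing `aksUrl` is obvious.

```python
import random
from typing import Dict, Optional

# Hypothetical stand-in for the APIM named values referenced by the Global level policy.
NAMED_VALUES = {
    "canaryPercent": 10,
    "aksHost": "10.2.15.10",
    "aksHostCanary": "10.3.15.10",
}


def global_inbound(variables: Dict[str, str], rng: random.Random) -> None:
    """Mimic the Global level policy: store the chosen AKS URL in the 'aksUrl' variable."""
    # rng.randrange(100) stands in for new Random().Next(100), an int in [0, 100).
    if rng.randrange(100) < NAMED_VALUES["canaryPercent"]:
        variables["aksUrl"] = f"http://{NAMED_VALUES['aksHostCanary']}"
    else:
        variables["aksUrl"] = f"http://{NAMED_VALUES['aksHost']}"


def api_inbound(api_name: str, variables: Dict[str, str], rng: random.Random) -> str:
    """Mimic an API level policy: <base /> first, then build the backend base URL."""
    global_inbound(variables, rng)  # <base /> executes the Global level policy
    aks_url: Optional[str] = variables.get("aksUrl")  # like GetValueOrDefault<string>
    if aks_url is None:
        # Design choice for the sketch: fail loudly instead of building a broken URL.
        raise RuntimeError("aksUrl was not set by the Global level policy")
    return f"{aks_url}/{api_name}/"


rng = random.Random(3)
for api in ("api-a", "api-b", "api-c"):
    # A fresh dict per call, like a new request context.
    print(api, "->", api_inbound(api, {}, rng))
```

Changing `NAMED_VALUES` or the branching in `global_inbound` changes routing for every API at once, while each API level function only appends its own path.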

Switching scenario

With this setup in place, here is the typical switch scenario:

- The current environment is `blue`. APIM named values state: `aksHost` = 10.2.15.10, `canaryPercent` = 0

- We provision the `green` environment and want to send 10% of the traffic to `green`. APIM named values state: `aksHost` = 10.2.15.10, `aksHostCanary` = 10.3.15.10, `canaryPercent` = 10

- We want to increase canary traffic to 50%. APIM named values state: `aksHost` = 10.2.15.10, `aksHostCanary` = 10.3.15.10, `canaryPercent` = 50

- Everything looks good and we want 100% of the traffic to go to `green`. APIM named values state: `aksHost` = 10.3.15.10, `canaryPercent` = 0

At this point, `green` is our active environment and we can decommission `blue`.
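The switch scenario above can be expressed as a sequence of named-value states and checked before any change is applied. The Python sketch below is illustrative only: `STAGES` mirrors the four steps, and `CLUSTERS` follows the endpoints listed earlier (`aks-dev-blue` at 10.2.15.10, `aks-dev-green` at 10.3.15.10). Because `Next(100)` yields each value from 0 to 99 with equal probability, counting the values below `canaryPercent` gives the exact expected share per cluster, with no randomness involved.

```python
from typing import Dict, List

# Endpoints from the use-case: blue at 10.2.15.10, green at 10.3.15.10.
CLUSTERS: Dict[str, str] = {"10.2.15.10": "aks-dev-blue", "10.3.15.10": "aks-dev-green"}

# One APIM named values state per step of the switch scenario.
STAGES: List[Dict[str, object]] = [
    {"aksHost": "10.2.15.10", "canaryPercent": 0},  # blue only
    {"aksHost": "10.2.15.10", "aksHostCanary": "10.3.15.10", "canaryPercent": 10},
    {"aksHost": "10.2.15.10", "aksHostCanary": "10.3.15.10", "canaryPercent": 50},
    {"aksHost": "10.3.15.10", "canaryPercent": 0},  # green only
]


def expected_share(state: Dict[str, object]) -> Dict[str, int]:
    """Percent of requests per cluster, counted over every value Next(100) can return."""
    share = {name: 0 for name in CLUSTERS.values()}
    for draw in range(100):  # each value 0..99 is equally likely
        # aksHostCanary is only read when a draw falls below canaryPercent.
        host = state["aksHostCanary"] if draw < state["canaryPercent"] else state["aksHost"]
        share[CLUSTERS[host]] += 1
    return share


for step, state in enumerate(STAGES, start=1):
    print(f"step {step}: {expected_share(state)}")
```

Running it prints blue/green shares of 100/0, 90/10, 50/50 and 0/100 for the four steps, matching the intent of each step in the scenario.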

Useful links

- Azure Traffic Manager

- Azure Traffic Manager weighted traffic-routing method

- Azure Front Door

- Azure Front Door weighted traffic-routing method

- APIM control flow policy

- APIM set variable policy

- APIM set backend service policy

- APIM named value

- Network Security Group

- Create an ingress controller to an internal virtual network in Azure Kubernetes Service (AKS)

- How to use Azure API Management with virtual networks

If you have any issues/comments/suggestions related to this post, you can reach out to me at evgeny.borzenin@gmail.com.

With that - thanks for reading!